Technology

Sora AI Is Every Content Creator’s Dream. It’s Almost Here!


OpenAI’s Sora

Sora is the Japanese word for sky, our blue expanse that is often associated with limitless dreams and possibilities.

You may have heard that OpenAI is releasing an AI video generator called Sora AI. With its fantastical visuals and lifelike video, it is without a doubt one of the top 5 AI technologies of 2024.

OpenAI recently released “Air Head,” the first short film made with Sora, and if it proves anything, it’s that Sora is every content creator’s dream turned reality.

If you’re still not convinced, perhaps this video will help. Here’s a little game called “Can You Spot the AI Video?”

How Can Sora AI Help Content Creators?

Video producers, filmmakers, animators, visual artists, and game developers all have one thing in common: they are always looking for the next big thing in creative expression. Sora AI is a tool that can greatly enhance content creators’ ability to fuel their imagination and connect with their audiences.

A common misconception is that AI is going to replace human artists, videographers, and animators. But if Sora’s first short film has shown anything, it’s that a team was still needed to create the story, narrate the scenes, and edit the videos into the final production.

Sora won’t replace artists; it will equip them with tools to express their artistry in different ways.

Sora’s First Short Film

Shy Kids, a Toronto-based auteur and multimedia company, is among the few creators granted early access to Sora AI to help test and refine it before launch. The short film its artists generated using Sora AI is called “Air Head.”

It’s pretty mind-blowing to think that one day we might be able to create an entire movie whose main character is a balloon. Think of the comedies we could create.

How Does Sora AI Work?

Sora’s first short film, “Air Head,” suggests that Sora AI may be the most advanced AI-powered video generator yet. Sora creates realistic, detailed videos up to 60 seconds long on almost any topic, realistic or fantastical. It needs only a text prompt from the user to build on existing information and develop whole new worlds.

What We Know So Far

Sora AI is a new technology with limited access. There’s a strategic reason to limit information about a new technology: it manages the public’s expectations while the final product is polished. Sora is a very powerful tool, and it may be necessary to put strong safeguards and guidelines in place before releasing it. Here’s what we know so far.

Sora Release Date

OpenAI has not announced a specific date for public availability or even opened a waiting list. However, many sources indicate that Sora may be released in the second half of 2024. Currently, Sora AI is available only to testers called “red teamers” and to a select group of designers, such as Shy Kids, who have been granted early access.

Sora Price

OpenAI has not yet announced a price for Sora AI and has not said whether there will be a free version like its other AI models. Based on other AI text-to-video generators, it’s likely that there won’t be a free version and that Sora will offer a tiered subscription model for users who want to produce videos regularly.

There is also a possibility of a credit-based system, similar to that of its competitor RunwayML. In a credit-based system, users purchase credits and spend them on specific tasks related to generating a video.
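To make the credit model concrete, here is a minimal Python sketch of a prepaid credit balance. Everything in it is an assumption for illustration: OpenAI has announced no Sora pricing, and the task names and costs in the hypothetical `TASK_COSTS` table are not RunwayML’s actual rates.

```python
from dataclasses import dataclass, field

# Hypothetical per-task credit costs; no real Sora or RunwayML pricing is implied.
TASK_COSTS = {
    "generate_5s_clip": 25,
    "extend_clip": 10,
    "upscale": 5,
}


@dataclass
class CreditAccount:
    balance: int
    history: list = field(default_factory=list)

    def purchase(self, credits: int) -> None:
        """Add purchased credits to the balance."""
        if credits <= 0:
            raise ValueError("credits must be positive")
        self.balance += credits
        self.history.append(("purchase", credits))

    def spend(self, task: str) -> bool:
        """Deduct a task's cost; refuse the task if the balance is too low."""
        cost = TASK_COSTS[task]
        if cost > self.balance:
            return False
        self.balance -= cost
        self.history.append((task, -cost))
        return True


account = CreditAccount(balance=0)
account.purchase(50)
account.spend("generate_5s_clip")       # 50 - 25 leaves 25
account.spend("extend_clip")            # 25 - 10 leaves 15
ok = account.spend("generate_5s_clip")  # needs 25, only 15 left
print(account.balance, ok)              # 15 False
```

The sketch refuses a task outright when the balance is too low rather than letting it go negative, so every generation is paid for before it runs.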

Sora’s Video Length

OpenAI has said Sora can generate videos of up to a minute long with visual consistency. Scientific American states that users will be able to increase the length of a video by adding additional clips to a sequence. Sora’s first short film, “Air Head,” runs for a minute and twenty seconds, which suggests that clips can be combined into productions longer than a single generation.

Sora’s Video Generation Time

OpenAI has not revealed how long it will take Sora AI to generate a video. However, Sora is expected to run on NVIDIA H100 GPUs, which are designed to handle complex artificial intelligence workloads. According to estimates from Factorial Funds, these GPUs would allow OpenAI’s Sora to create a one-minute video in approximately twelve minutes.

How is Sora AI Different from Other Video Generators?

Many text-to-video generators have trouble maintaining visual coherence. They often produce visuals that are completely different from one another in each scene, so the videos require further editing. In some cases, it takes longer to create the video you want with AI than it does to create it yourself.

Sora AI seems to surpass other text-to-video generators in the level of detail and realism it creates. It also appears to have a better grasp of how the physical world operates.

It Brings Motion to Pictures

Another Sora AI feature is its ability to animate still images. Sora will be able to take a still photo, such as a portrait, and bring it to life by adding realistic movement and expression to the subject. This means you could generate an image using OpenAI’s DALL·E model and then prompt Sora with text describing what you would like the image to do.

This is like something out of Harry Potter. One of the biggest worries is that Sora AI will be able to depict someone saying or doing something they never did. I don’t think the world’s ready for another Elon Musk deepfake.

Will Sora AI Undermine Our Trust In Videos?

There are over 700 AI-managed fake news sites across the world. OpenAI is already working with red teamers, experts in areas such as misinformation, to help prevent Sora AI from being used in ways that undermine our trust in videos.

Detection classifiers will play a big role in the future of AI. They include tools that can detect AI-generated writing, as well as content credentials, which use a file’s metadata to show whether an image was made using AI.

AI image generators like Adobe Firefly are already using content credentials for their images.

Why do Sora AI Videos Look So Good?

Sora AI generates its videos using “spacetime patches.” Spacetime patches are small segments of video that let Sora analyze complex visual information by capturing both appearance and movement efficiently. This produces more realistic and dynamic video than other AI video generators that rely on fixed-size inputs and outputs.
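As a rough illustration of what “spacetime patches” means, the Python sketch below cuts a tiny video into blocks that each span a few frames and a small region of the image. It is a simplified assumption, not OpenAI’s code: the function name `to_spacetime_patches` and the patch sizes are hypothetical, and it works on raw pixel values, whereas OpenAI’s technical report describes patching a compressed latent version of the video.

```python
def to_spacetime_patches(video, pt, ph, pw):
    """Split a video (nested lists indexed [t][y][x]) into flattened
    spacetime patches of pt frames x ph rows x pw columns.

    Hypothetical illustration on raw pixels; Sora reportedly patches a
    compressed latent representation instead.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, T, pt):
        for y0 in range(0, H, ph):
            for x0 in range(0, W, pw):
                # Each patch holds pixels from several consecutive frames,
                # so one patch captures both appearance and motion.
                patch = [
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ]
                patches.append(patch)
    return patches


# A toy 4-frame, 4x4 grayscale "video" whose pixel values encode t, y, x.
video = [[[t * 100 + y * 10 + x for x in range(4)] for y in range(4)] for t in range(4)]
patches = to_spacetime_patches(video, pt=2, ph=2, pw=2)
print(len(patches))  # 8, i.e. (4/2) * (4/2) * (4/2)
print(patches[0])    # [0, 1, 10, 11, 100, 101, 110, 111]
```

Because the number of patches simply grows or shrinks with a clip’s duration and resolution, a model built on patches does not need every input to be the same fixed size.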

One commenter said Sora AI videos are like dreams, only clearer. That’s not a bad way to put it. After all, dreams are like movies our brains create, as anyone who gets plenty of REM sleep will understand. But speaking of movies, how will Sora AI affect Hollywood?

Can Sora AI Replace Movies?

As amazing as OpenAI’s text-to-video generator is, it can’t replace actors or carry them through a prolonged storyline, but it can help producers create some fantastic movies. Sora AI can be used to create pre-visualizations, generate concept art, and help producers scout potential locations.

Pre-visualization: Sora can turn scripts into visual concepts to help both directors and actors plan complex shots.

Concept Art Creation: It can be used to generate unique characters and fantastical landscapes, which can then be incorporated into the design of movies.

Location Scouting: Using the prompt description, OpenAI’s Sora can expand on location options and even create locations that could not physically exist. An example would be a city protruding from a planet floating in space (I sense the next Dune movie here).

IC Inspiration

Content creators have a story to tell, and fantastic content is often the product of a fantastic mind. Sora could transform how we share inspiring stories.

Just imagine for a moment how long it took to conceptualize the locations and characters needed to create a movie like The Lord of the Rings. Think of how many sketches, paintings, and 3D models the team had to create before they had their “aha moment” and finally found the perfect look for the movie.

I wonder how much time, and with it how much money, Sora AI could save film and content creators. If it is truly as intuitive as it appears to be, it could revolutionize the work of filmmakers, video creators, game developers, and even marketers.

A lot of campaigns are too hard to visualize. Take Colossal Biosciences as an example. The company has launched a de-extinction project to bring back the woolly mammoth. How on earth do you depict the process of de-extinction in a campaign video without spending an enormous amount of money?

Sora could be just what the doctor ordered.

Technology

A Talking Lamp? Top 5 Unbelievable AI Technologies in 2024


AI Technologies in 2024

The year 2024 is sure to go down in history as the era of Artificial Intelligence. New AI technology has reached an astounding level of accuracy and versatility. The possibilities are now endless.

In the last few months, new ideas and gadgets have been coming out at such a pace that it’s nearly impossible to keep up. Here’s a rundown of the top 5 unbelievable AI technologies in 2024 and beyond.

OpenAI: Sora AI

OpenAI’s new Sora application can make realistic video footage based on your language prompt.

Sora was trained on a large library of video samples, learning to associate what it sees with certain words. For example, if a user asks for a video of two pirate ships battling each other in a cup of coffee, Sora can draw on what it has learned about ships, battles, and coffee, including their designs, movements, and concepts, and combine them into an original and realistic video.

OpenAI is being understandably cautious about releasing this new AI technology in 2024. It has released Sora to only a few testers so that bugs can be found and fixed. Nevertheless, this upcoming AI technology sends the imagination soaring.

Baracoda: BMind AI Smart Mirror

This upcoming AI mirror is designed to support its owner’s mental health and improve their mood.

The BMind AI Mirror was developed by health tech pioneer Baracoda. It uses Artificial Intelligence to scan its owner’s face, posture, and gestures. Then it makes an educated guess as to their current emotional state. It even asks them how they’re feeling!

Recently, a journalist at an AI conference tested the mirror out. When she stood in front of it, it began by asking how her day was going. To test it, she said her day was terrible. It then turned on a calming, blue light, offered words of encouragement, and even offered a meditation session.

This new AI technology is expected to be available to the public by the end of 2024, with a price tag between $500 and $1,000.

Nobi: Smart Lamp

One of the things seniors fear most is falling. They are more prone to falls than younger people and are affected by them more severely.

That’s why Nobi, the age tech specialist, developed the Smart Lamp. This attractive ceiling light fixture is loaded with high-tech features. Its primary purpose is to prevent falls and provide instant help when falls occur.

It unobtrusively monitors the movements of anyone in its range. As soon as someone falls, the caregiver who is connected to the lamp is notified. The caregiver can then talk with their patient or loved one through the lamp to make sure they are okay.

After a fall, the device provides caregivers with security-protected pictures of the incident. Caregivers can analyze the pictures to see exactly what happened, helping them prevent future falls.

Pininfarina: OSIM uLove3 Well-Being Chair

There’s a new way to relax using AI technology in 2024. It’s a new chair that could find its way into the corner of your own living room.

Pininfarina is the Italian luxury design firm famous for designing Ferraris. It has now released an amazing new AI smart chair that takes stress relief into new realms.

Everyone experiences stress in their own way, depending on factors such as age, size, and activity level. When a user sits in the OSIM uLove3 Well-Being Chair, an AI-powered biometric algorithm measures heart rate and lung function and uses them to determine a body tension score. The chair then uses that score to provide a personalized program of massages and calming music.

This chair is already available for purchase. At around $7,999.00 USD, it doesn’t come cheap, but for such a cool thing, it may just be worth the price.

Swarovski: AX Visio Smart Binoculars

Bird watchers and other outdoor enthusiasts around the world will be excited to hear about Swarovski’s AX Visio Smart Binoculars.

Bird watching is a practice as old as humankind itself. It’s fun, relaxing, and easy to do. It’s hard to imagine that someone could have found a way to make it even better. Swarovski, the luxury crystal company, was up to the challenge. They’ve developed a fantastic new pair of binoculars that will make spotting much more exciting.

The binoculars use new AI technology to access an immense database of bird data from the Cornell Lab of Ornithology, one of the premier bird research facilities in the world. The binoculars use this database to identify the exact species of bird or mammal they are focused on.

Work is ongoing to teach the binoculars to identify other subjects, such as butterflies, mushrooms, and even stars.

Other New AI Technologies You Should Know About

2024 won’t be the only year to look forward to when it comes to artificial intelligence. Here are a few other AI technologies that we’ve covered on Inspiring Click:

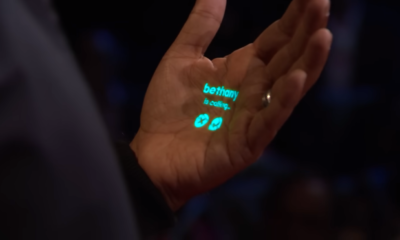

- The Humane AI Pin: This is a wearable, standalone AI device that can do things like translate your speech into another language and monitor dietary choices. It is meant to replace your phone with something less obtrusive.

- Organoid Intelligence: Also known as OI, this is a new branch of AI that is creating the world’s first biological computer using real brain cells.

- Smart Cane: The Smart Cane turns ordinary walking canes into ones that can pair with smartphones, helping the visually impaired in incredible ways.

- Iterate.ai Weapon Detection System: This is a free, open-source app offered to non-profit organizations to help protect them. It can detect guns in schools and immediately inform the police.

- Google’s Articulate Medical Intelligence Explorer: This is Google’s newest medical AI, which runs diagnostics on patients. In the future, it may be used in clinics so that you can see a doctor a lot sooner.

AI technology in 2024 is sure to make many things that we once thought of as fantasy into a reality. This leaves us wondering what inspiring innovations tomorrow will bring.

IC Inspiration

At the dawn of the current AI boom came the now-famous ChatGPT. It was one of the first widely available applications that allowed natural conversation between computers and humans.

Who knew it could help diagnose a young boy?

Alex started experiencing significant pain at the age of four when he tried jumping in a bouncy house his parents bought him. His nanny gave him pain medication which helped for a while, but then his symptoms started changing. He was having emotional outbursts. He started chewing things and seemed exhausted all the time. He couldn’t move his legs properly, seeming to drag the left one behind him like dead weight.

His parents took him to one doctor, and then another. Time passed, and a variety of other doctors failed to come up with a diagnosis that satisfactorily explained all his symptoms.

His mother, Courtney, theorizes that this was because each specialist was only interested in or able to study their own area of expertise. His condition impacted so many areas of his life that no single specialist could get to the heart of the matter.

So, after 17 different doctors and one trip to the emergency room, Courtney tried a different approach. She asked ChatGPT.

“I went line by line of everything that was in his (MRI notes) and plugged it into ChatGPT,” she told Today.com. “I put the note in there about … how he wouldn’t sit crisscross applesauce. To me, that was a huge trigger (that) a structural thing could be wrong.”

And she was right.

Once Courtney had given ChatGPT everything she knew about Alex’s condition, it compared those details against the medical knowledge it had learned and suggested “tethered cord syndrome.”

It’s a relatively rare medical condition related to the more commonly known spina bifida. In children who have it, the spinal cord is attached to surrounding tissue, such as a cyst or a bone, instead of moving freely. It causes pain and limits a child’s freedom of movement.

Courtney took Alex to a new neurologist along with ChatGPT’s suggestion, and the doctor agreed with the computer.

Today, Alex has undergone surgery to correct his condition. He’s beginning to recover, much to his and his family’s tremendous relief. Soon, this sports-loving boy may be out on the field with his friends again.

Experts still warn against the idea that ChatGPT can be an effective diagnostician.

It can only review and repeat what others have written and can’t think outside the box and come up with an original diagnosis. It’s still better to get a final diagnosis from a trained medical expert.

However, it’s an exciting thought that it can, at the very least, start us on the right path. It helped Alex find the treatment he deserves.

What a tremendous start to AI technologies in 2024.

Technology

Alef Model A Flying Car Pre-Orders Surge to a Whopping 2,850


Alef Model A

Alef, the first letter of the Hebrew, Arabic, and Persian alphabets, is now the name behind what its maker bills as the first flying car.

The all-electric Alef Model A is set to take to the skies in 2025. The new flying car, whose look was developed by Bugatti designer Hirash Razaghi, has recently received certification from the Federal Aviation Administration (FAA) to begin testing its flight capabilities, and pre-orders are surging.

Alef Aeronautics CEO Jim Dukhovny was inspired to create a flying car after watching Back to the Future. Coincidentally, Alef Aeronautics started developing the car in 2015, the same year that Back to the Future Part II predicted we would have real flying cars.

Alef Model A Price

The Alef Model A is priced at $300,000 USD per vehicle, and pre-orders are being taken right now. Buyers can place a general pre-order for $150 USD or pay $1,500 USD to join the priority queue.

According to Jim Dukhovny, 2,850 pre-orders have been placed so far. At $300,000 per vehicle, this represents roughly $855,000,000 in potential revenue for Alef Aeronautics once the cars are delivered. These numbers are expected to rise as the Alef Model A continues to wow audiences.
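The revenue figure is simple multiplication, sketched here in Python. The deposit bounds are our own arithmetic from the quoted pre-order fees; the article doesn’t say how the 2,850 pre-orders split between the general and priority queues, so the amount actually collected so far should lie somewhere between the two bounds.

```python
PRICE_PER_CAR = 300_000   # USD, quoted list price
PREORDERS = 2_850
GENERAL_DEPOSIT = 150     # USD, general pre-order
PRIORITY_DEPOSIT = 1_500  # USD, priority queue

# Revenue if every pre-order becomes a sale at list price.
potential_revenue = PRICE_PER_CAR * PREORDERS

# Deposits collected so far, assuming all general vs. all priority pre-orders.
min_deposits = PREORDERS * GENERAL_DEPOSIT
max_deposits = PREORDERS * PRIORITY_DEPOSIT

print(f"Potential revenue: ${potential_revenue:,}")                # $855,000,000
print(f"Deposits so far: ${min_deposits:,} to ${max_deposits:,}")  # $427,500 to $4,275,000
```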

The new flying car made an appearance at the Detroit Auto Show, which triggered a surge in pre-orders that has continued since.

How Does the Alef Flying Car Work?

The new flying car works like a conventional car that you can drive on streets, but it is also equipped with eight propellers. These let its driver lift off vertically from a traffic jam and fly away, leaving traffic behind, or rather underneath, them.

Flying Cars Can Solve the World’s Traffic Problems

In an era when drivers in some cities spend up to 156 hours a year in traffic jams, flying cars could help relieve a congestion problem that researchers say needs to be addressed. In fact, drivers in major Canadian cities spent an average of 144 hours stuck in traffic in 2022 alone. If traffic doesn’t improve, a Canadian who keeps driving for the next 30 years could spend roughly 180 days of their driving life stuck in traffic jams.
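Here is the arithmetic behind that estimate, sketched in Python under the assumption that the 2022 average of 144 hours per year stays constant for all 30 years.

```python
HOURS_PER_YEAR_IN_TRAFFIC = 144  # average for major Canadian cities, 2022
YEARS_DRIVING = 30               # assumed constant rate for the whole period

total_hours = HOURS_PER_YEAR_IN_TRAFFIC * YEARS_DRIVING
total_days = total_hours / 24

print(total_hours, total_days)  # 4320 180.0
```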

What is the Speed of the Alef Model A?

On the road, the flying car is classified as a low-speed vehicle, with a top driving speed of 25 mph (40 km/h). It has a driving range of 200 miles (322 km) and a flying range of 110 miles (177 km).

Because it is fully electric, the Alef Model A is more than a way to reduce time wasted on congested roads; it is also emerging as an option for sustainable transportation, much like Michelin’s Uptis airless car tires.

Has the Alef Model A Actually Flown?

While Alef flew prototypes between 2016 and 2019, the company has yet to give a live demonstration of the Model A actually flying. In fact, its top flying speed has not yet been revealed. This suggests that smaller-scale experiments are under way before a full public flight takes place.

Alef Model A Release Date

The FAA has granted the Alef Model A a special airworthiness certificate so the company can begin testing its flight capabilities. Alef Aeronautics plans to ship the flying cars in 2026. However, the new flying car still needs approval from the National Highway Traffic Safety Administration before it can be used on public roads.

The Alef Model Z Release Date and Price

Alef Aeronautics is also developing a four- to six-passenger version of the flying car called the Alef Model Z. The company aims to release it in 2035 at a much lower price of $35,000, which is meant to put flying cars within reach of more people. Other flying cars, like the Doroni H1, have also been given competitive prices for their eVTOL (electric vertical takeoff and landing) designs.

Alef Model A vs Doroni H1

The Alef Model A is not the only flying car. The Doroni H1 is another highly anticipated eVTOL. While the two share some futuristic features, they have some key differences:

- Alef Model A: Designed to look and feel like a traditional car. This flying car is made for extended journeys, with a focus on integration into existing roadways. It can drive on roads and then elevate to fly.

- Doroni H1: Resembles a conventional small aircraft, with a distinct emphasis on its aerial capabilities. This eVTOL is designed for shorter flights and is made for people who want the experience of being their own pilot.

Functionality:

| Specification | Alef Model A | Doroni H1 |
| --- | --- | --- |
| Range on road | 200 miles | N/A |
| Range in air | 110 miles | 60 miles per trip |
| Top speed in air | Not yet revealed | 120 mph |
| Capacity | 1 driver, 1 passenger | 1 pilot, 1 passenger |
| Price (approx.) | $300,000 | $150,000 |

Are Flying Cars the Future?

The flying car market is estimated to reach a value of $1.5 trillion by 2040. Interestingly enough, that is about as much as the current car market is worth today. Moreover, many flying car companies are revealing plans for eVTOLs with affordable price tags. All signs point to the possibility that flying cars will soon be a big part of the future.

As suggested in the Alef Model A video, you can expect to see emergency responders, the army, and perhaps even local police flying them, because response time is crucial for them.

But what if everyone does have a flying car in the future? What would it take to make that possible, and what would happen to our planet?

Will Flying Cars be Autonomous?

Once a certain number of cars are in the air, flying would have to be autonomous. This would be the only way to ensure the safety of fliers as the sky fills up with vehicles (imagine distracted flying for a moment; it’s quite different from distracted driving).

Autonomous flying cars would have to use radar and lidar to detect everything around them. There are no lane markings in the air, so cameras alone would be of little use in keeping a self-flying car on course. In fact, there would be no fixed course at all; the entire sky could be everyone’s flying space.

What Would Happen if Everyone Did Fly Cars?

But imagine for a moment that we made it work, and this all happened. What would happen to the roads?

Just recently, what has been described as the world’s first freeway system was discovered in an ancient Maya city that remained hidden for thousands of years beneath a jungle in Guatemala.

Nature begins to take over roads when they are not being used. Everything turns green and lush, habitats start to form, and if everyone is flying from one place to another in an electric car, then the view underneath becomes the sort of sight that you used to travel miles away from home just to see.

Could the future be one where technology and nature coexist? One where so much greenery makes the air you breathe so clean that it gives a whole new meaning to the term “fresh air,” if only because we’re literally in the air?

A motivational thought indeed, and perhaps only a dream. Maybe someone who comes back to the future can let me know.

Are you reading this, Doc? If so, get in touch on our socials below.

Technology

Free AI Weapon Detection App Can Prevent School Shootings

AI Weapon Detection

School shootings are sudden and horrific events. There have been 394 school shootings in North America since 1999. These events are marked by panic, chaos, confusion, and missing information. But now, AI weapon detection systems are being used to help stop school shootings before they even happen. Some highly motivated companies are even offering these systems for free.

Iterate.ai Open Source Threat Detection

Iterate.ai is a tech company that is offering a free AI weapon detection system to help stop school shooters in their tracks. The AI has been trained to detect guns, knives, Kevlar vests, and robbery masks.

Brian Sathianathan, Chief Technology Officer at Iterate.ai, says schools and universities need technology like this, and that offering an open-source version of the system is the most effective way to get it into as many schools as possible.

Are You a Non-Profit That Could Use an AI Weapon Detection System?

The source code for the threat detection system is available on GitHub through the Iterate.ai website. This encourages software developers, UX designers, and AI engineers to make the app even better. Iterate.ai wants non-profits such as schools and religious institutions to use the system.

There is no single miracle answer to a complicated problem like school violence, but AI weapon detection systems could protect thousands of people.

How Does an AI Weapon Detection System Work?

The AI weapon detection system scans hundreds of items in a given space at a time. It then uses algorithms to instantly detect a gun or knife being carried into the area. When it catches something dangerous, it signals the authorities within seconds. It can even track the individual as they move throughout the building.

In past attacks, distressed survivors have given police contradictory and confused information. This can cost officers vital time in stopping the shooter. With AI weapon detection technology, however, police can enter active situations with vital information already available to them. They can even receive second-by-second updates on the suspect’s location and actions.

How Does AI Scan for Weapons?

Every AI gun detection system uses scanning software. By categorizing hundreds of items in a space, the software can zero in on threats. The AI is trained on videos and fictional scenarios that help it learn what it is looking for and what a particularly dangerous situation looks like.

Iterate.ai weapon detection, for example, has been programmed to instantly recognize a variety of weapons. This includes handguns, semi-automatic weapons, bulletproof vests, and knives that are more than six inches long.

This particular AI was trained on 20,000 videos of armed robberies recorded over the last twenty years.
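The article doesn’t describe Iterate.ai’s actual code, so the snippet below is only a hypothetical Python sketch of the final step described above: taking the objects a detector has already recognized in a frame, keeping the ones that count as threats, and producing alerts. The label strings, the `Detection` fields, and the 0.8 confidence threshold are all assumptions; only the list of weapon types and the six-inch knife rule come from the text, and the object detector itself is not shown.

```python
from dataclasses import dataclass
from typing import List, Optional

# Weapon types the article says Iterate.ai's system recognizes.
# Label names, threshold, and Detection fields are hypothetical.
THREAT_CLASSES = {"handgun", "semi_automatic_weapon", "bulletproof_vest", "knife"}
MIN_KNIFE_LENGTH_IN = 6.0
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Detection:
    label: str                         # class predicted by the (unshown) detector
    confidence: float                  # detector score between 0 and 1
    camera_id: str                     # where the object was seen
    length_in: Optional[float] = None  # estimated object length, if available


def is_threat(d: Detection) -> bool:
    """Decide whether a single detection should trigger an alert."""
    if d.label not in THREAT_CLASSES or d.confidence < CONFIDENCE_THRESHOLD:
        return False
    if d.label == "knife":
        # Only knives longer than six inches are flagged.
        return d.length_in is not None and d.length_in > MIN_KNIFE_LENGTH_IN
    return True


def alerts_for_frame(detections: List[Detection]) -> List[str]:
    """Return one alert message per threatening detection in a frame."""
    return [
        f"ALERT: {d.label} ({d.confidence:.0%}) on camera {d.camera_id}"
        for d in detections
        if is_threat(d)
    ]


frame = [
    Detection("backpack", 0.97, "hall-2"),
    Detection("knife", 0.91, "hall-2", length_in=4.0),
    Detection("handgun", 0.88, "entrance-1"),
]
print(alerts_for_frame(frame))
# ['ALERT: handgun (88%) on camera entrance-1']
```

Keeping the rules separate from the detector means a school could tighten or relax them without retraining the model; a real system would also need to send these alerts to authorities and track the person across cameras, which this sketch leaves out.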

The Future of AI Weapon Detection Systems in School

An AI gun detection system is not a complete solution, but it provides hope that many dangerous situations will be averted in the future. With tools like this, it becomes more likely that we can send our children to school and see them return safely, even if a bad situation does happen.

IC Inspiration

Arguably, one of the strongest voices in the endless struggle with school violence comes from a girl whose voice was silenced many years ago.

Rachel Joy Scott was the first person killed in the Columbine massacre. She was only 17 years old.

However, even amid such horror, something amazing arose.

There were things about Rachel that even her family didn’t know. She was a compassionate and generous person who had a dream to reach out to those who needed it the most.

In her diary, she wrote: “I want to reach out to those with special needs because they are often overlooked. I want to reach out to those who are new in school because they don’t have any friends yet. And I want to reach out to those who are picked on or put down by others.”

She didn’t just write these words, however. She lived them, too.

People began sharing wonderful stories about how she befriended those who were different. They told how she protected her classmates against bullies.

When she was thirteen, she traced her hands on the back of her dresser. Beside the tracing, she wrote a message:

“These are the hands of Rachel Joy Scott and will someday touch millions of people’s hearts.”

Her dream to reach out to the world could have ended that day in the schoolyard at Columbine High School. Her family decided to make sure that didn’t happen.

Instead, her memory and legacy are being shared through a non-profit organization called “Rachel’s Challenge.” The organization works with students and teachers at all levels. Its speakers visit schools to talk with teachers and students about how to end bullying and school violence, and they encourage students to take up Rachel’s challenge, a concept she developed in an inspirational essay she wrote shortly before her death.

“I have this theory, that if one person can go out of their way to show compassion, then it will start a chain reaction of the same”

– Rachel Joy Scott

At each Rachel’s Challenge event, students and staff are encouraged to take up this challenge. They’re asked to put it into practice in their lives and their schools. Students are even encouraged to sign a pledge to give their school a fresh start. In some schools, clubs are founded based on the challenge. They’re called Friends of Rachel clubs.

People are not limited to in-person visits, however. The Rachel’s Challenge website encourages visitors to sign the pledge online.

Rachel’s Challenge has seen great success, reaching thirty million students, educators, and parents. The organization credits its work with saving an average of 150 lives every year and reports that at least eight school shootings have been averted.

Of all the recent innovations in Artificial Intelligence, perhaps the one thing that will finally make school shootings a thing of the past is just a little bit of simple compassion.

Rachel sure thought so.

“My codes may seem like a fantasy that can never be reached but test them for yourself, and see the kind of effect they have in the lives of people around you. You just may start a chain reaction.”
