Science

This Incredible Study of The Brain May Help Cure Alzheimer’s

Published 2 years ago

By Carlos Curti

We’ve got some amazing neuroscience news for you! A new study of the brain has put scientists on a path toward better understanding the early signs of Alzheimer’s disease and finding effective treatments for it, and believe it or not, it all started with them studying our brain’s internal compass.

Have you ever found yourself exploring a new part of town and suddenly losing track of which way to go? It happens to the best of us. But fear not because our brains have this amazing feature called the internal compass that helps us find our way, just like a magical guide showing us the right path.

The researchers wanted to gain a better understanding of how visual information affects our internal compass. As virtual reality technology starts to gain more and more traction, this research can be extremely valuable in giving us a better understanding of the effects it may have on us.

In order to dive deeper into the effects of virtual experiences and particularly how they may make us feel disoriented, the scientists took mice on a virtual adventure. They exposed them to a special virtual world that made the mice feel a bit disoriented, and while the mice went exploring, the researchers tracked the activity in their brains.

What they discovered was a phenomenon they called “network gain.” Network gain is like a reset button that quickly helps us get back on track when we’re feeling confused. Imagine that! Our brains have a secret mechanism to reorient themselves and save the day in puzzling situations, eventually consolidating our sense of direction.

For some dedicated researchers, the virtual world isn’t just a game—it’s a scientific puzzle waiting to be solved.

The scientists are convinced that a better understanding of our internal compass and navigation system could improve outcomes for people with Alzheimer’s disease, because feeling lost and disoriented is one of its symptoms.

Through the research, scientists are now further studying the significant implications for the disease, particularly how we can detect its early signs and produce effective treatments for it.

These incredible findings have sparked the curiosity of scientists who are currently developing new models to dig deeper into how all these brain mechanisms work together. They are on a mission to help those with Alzheimer’s by continuing to unlock the secrets of our brain’s internal compass, as if drawing up a roadmap to a brighter future.

IC INSPIRATION

Have you ever pondered the incredible complexity of our brains? It’s truly amazing to consider that it might be the most intricate thing in the entire universe. No wonder humanity is endlessly fascinated by the quest to understand and unravel its mysteries.

In the United States alone, approximately 6.5 million people are currently grappling with Alzheimer’s disease, and worldwide, that number is estimated to be around 55 million. As technology advances, the study of the brain becomes more and more important. Just imagine the potential if we could find a way to effectively treat this devastating condition that currently lacks a cure.

Neuroscience has come a long way, thanks to amazing advancements in technology. Scientists like Mark Brandon and Zaki Ajabi from McGill University and Harvard University have been using cutting-edge tools to explore questions that were once unimaginable, giving us a sense of direction by studying our literal sense of direction.

It’s like they’re pushing the boundaries of what we thought was possible.

Thanks to the ongoing research of these people, there is hope that someday very soon, mental illnesses will become relics of the past, much in the same way that life-long paralysis from nerve injuries will. We may live in a future where these things no longer hold sway over our lives, leading us to a happier and more fulfilling existence.

The possibilities are truly awe-inspiring, and it is through dedicated scientific exploration that we inch closer to achieving this remarkable goal.

Carlos is a content developer with a background in communications and business management. He is experienced in journalistic research and writing, as well as content creation, such as video, audio, photography, and script.


Science

Dire Wolf De-extinction: The Science Behind it and What’s Coming Back Next

Published 20 May 2025


For the first time in human history, an extinct, prehistoric animal, the large and beautiful dire wolf, has been brought back to life!

The dire wolf went extinct nearly 10,000 years ago.

Two male Dire Wolf cubs, Romulus and Remus, were born in October of 2024. Khaleesi, a female cub, followed in January of 2025. These little pups are undeniable proof of the viability of new de-extinction technology developed by the biotech company Colossal BioSciences.

The idea has caused both excitement and alarm. It raises exciting possibilities for the protection and restoration of our damaged ecosystems, but the thought also conjures up terrifying Jurassic Park style scenes of rampaging dinosaurs causing havoc and destruction.

We are at an amazing crossroads in human history. We have this incredible new technique, but where do we go from here? I’m reminded of a quote from Dr. Ian Malcolm in the movie Jurassic Park that goes:

“Scientists were so preoccupied with whether or not they could that they didn’t stop to think if they should”

Now might be our time to ask that very same question.

So, did they really bring back the dire wolf? How exactly does de-extinction technology work? What’s next on the de-extinction list, and is bringing back extinct species a good or a bad idea? We’ve got the full science scoop right here.

Did They Really Bring The Dire Wolf Back to Life?

The dire wolf has been brought back to life… sort of. The wolves would more accurately be described as gray wolf pups with some dire wolf lineage. Of course, that’s a pretty amazing thing all by itself, considering there hasn’t been a living, breathing Dire Wolf to pass on anything for over 10,000 years.

Recently, Beth Shapiro, Colossal’s chief science officer, told CNN, “We aren’t trying to bring something back that’s 100% genetically identical to another species. Our goal with de-extinction is always create functional copies of these extinct species.”

But Why?

The team at Colossal BioSciences recognizes the positive contributions this long-lost creature could make to our damaged and failing ecosystems today.

How Exactly Was The Dire Wolf Brought Back?

The dire wolf was brought back using gene editing. Scientists extracted DNA from two ancient fossils and identified the gray wolf as its closest living relative. They edited gray wolf embryos by inserting dire wolf DNA, then implanted them into wolves with the purest genomes. The result: living pups with dire wolf traits.

The problem with species that have vanished is that there are no longer any around to produce the fundamentals of procreation: sperm and ova. Without these, there can be no baby, right?

Well, scientists recognize another option. Sometimes, even species that haven’t been around for millennia leave something important behind: bone fragments, preserved tissue, or even microscopic strands of DNA. Even a tiny piece of DNA is sufficient to get the process started because DNA holds all the information needed to build a living organism from scratch.

Scientists can use strands of DNA to bring new life into the world, or what some would call bringing back the dire wolf. First, though, that DNA has to be integrated with the genome of the extinct animal’s closest living relative.

In the case of the Dire Wolf, scientists recognized the Gray Wolf as the best choice. Here’s a more detailed breakdown of the process.

How The Extinct Dire Wolf Was Brought Back: Step-by-Step

| Step | Summary |
|---|---|
| 1. DNA Discovery | DNA extracted from two fossils: a 13,000-year-old tooth and a 72,000-year-old inner ear bone. |
| 2. Closest Relative | Gray wolf identified as the best match to carry dire wolf DNA. |
| 3. Genome Screening | Only four wolves found with low enough dog DNA to qualify as surrogates. |
| 4. Gene Editing | Dire wolf DNA traits inserted into gray wolf embryos through gene editing. |
| 5. Implantation | Edited embryos implanted into gray wolves to give birth to hybrid pups. |
| Result | Living pups with dire wolf traits, reviving the species in hybrid form. |

1. Finding Dire Wolf DNA

Finding DNA from creatures who are long gone is never easy. However, the Dire Wolf posed an unusual puzzle.

A great many Dire Wolf fossils have been found across North and South America, including over 4,000 pulled from California’s La Brea Tar Pits. Yet, the scientific community has found it exceedingly difficult to find any specimens that contain enough intact DNA to be viable. In fact, an international team of scientists recovered only 0.1% of the Dire Wolf genome after studying 46 fossil specimens.

Colossal came into possession of the two most likely candidates. Both found in Ohio, the specimens included a 13,000-year-old tooth and a 72,000-year-old inner ear bone. From these two tiny fragments, Colossal was able to extract enough DNA to do the impossible. Using their own unique processes, the Colossal team was able to extract significantly more DNA than ever before.

2. Finding the Surrogate Mother

Although they are classified as an endangered species, there are an estimated 250,000 Gray Wolves living today. So, there should be nothing to finding a suitable female to host the dire wolf cubs, should there?

The challenge that the Colossal team ran into was finding a Gray Wolf with the purest DNA possible, because nearly all wolves across the world have some domestic dog DNA in their genome. The more domestic dog DNA in a wolf, the less likely the wolf is to be a successful candidate for surrogacy.

So, the Colossal team got to work analyzing genome sequences of over two dozen wolves across the US. They compared that data to the DNA of wolves and wild and domestic dogs from around the world. This process, known as gene sequencing, allows scientists to understand where the two species are similar, where they differ, and what traits will need to be isolated to create a viable hybrid animal.

Through this process, they were able to track down only four animals with a low enough percentage of domestic dog DNA to be candidates.

3. Putting it All Together to Bring Back Dire Wolves

Now they had the most complete Dire Wolf DNA that could be found. They also had a small group of living wolves who had the best possible DNA match for their prehistoric cousins. The next challenge was putting that all together to create the pups.

This process is called gene editing. It involves taking the desired traits from the Dire Wolf DNA and inserting them into the Gray Wolf DNA. This newly combined string of DNA is inserted into the nucleus of a Gray Wolf ovum, or egg. This egg is united with Gray Wolf sperm, then artificially implanted into a living wolf’s womb.

The true result is a living Gray Wolf pup with Dire Wolf traits.

The question everyone is asking, though, is why this whole thing was necessary in the first place.

The Ethics Question: They Can, but Should They?

Should We Be Bringing Back Extinct Species?

A few years ago, the question was whether we CAN use the DNA of long-extinct species to revive them in living ones. Well, Colossal BioSciences did it: they successfully bred a gray wolf that was part dire wolf.

The new cubs have the white fluffy fur of their ancient ancestors and are expected to achieve their great size. However, far from a hero’s welcome, the little cubs have instead sparked a barrage of questions and debate. The new question is whether we should be “bringing back” extinct species. Here are a few reasons for and against it.

Why De-Extinction is a Good Idea

The world’s ecosystems are in trouble, but there’s great hope. From building the World’s First Human Sentinel Underwater Habitat to creating genius plastic alternatives, inspiring people from across the globe are finding ways to create a resilient future for humanity.

Colossal BioSciences is doing the same in its own way. The company believes that de-extinction technology can:

- Help create more biodiversity

- Help current conservation management systems

- Improve technology in human healthcare

- Make breakthroughs in synthetic biology

Colossal BioSciences says it’s bringing back the woolly mammoth next.

The world has felt the absence of the Woolly Mammoth in surprising ways. One of them is that the perpetually frozen earth of the Canadian and Siberian tundra has begun to melt. Without mammoths to scrape away snow in search of buried grasses, the permafrost has become too insulated.

As the permafrost recedes, it can release massive quantities of carbon dioxide and methane into the air, both of which are greenhouse gases that can accelerate global warming.

Bringing Back The Woolly Mammoth is Next

The plan to bring back the woolly mammoth is to combine DNA from mammoth fossils with that of an Asian Elephant, creating a hybrid with key traits of a Mammoth. The resulting hybrid would have the thick, woolly coat and extra layers of fat that would allow it to survive the freezing temperatures of the north.

Some scientists believe this new mammoth hybrid would keep the grasslands healthier through its grazing activities. Old grass would be eaten away, leaving fresh grass to keep erosion in check with its roots. It would also scrape away the snow, allowing the cold winter air to reach through and preserve the critical permafrost layer.

If there’s one other thing that might make de-extinction a good idea, it’s this:

When a population of animals shrinks away, the biodiversity pool shrinks with them. Inbreeding has caused many of today’s animals to be born without the genes that help them fight disease. Finding these genes in ancient animals and editing them into living ones could mean the difference between extinction and survival.

However, there are some critics of de-extinction technology too.

The Difficulties in Bringing Back The Woolly Mammoth

Creating a hybrid animal from ancient DNA is one thing, but raising them and then finding a place for them in the wild is something else altogether. This is one of the primary arguments against going forward with this project.

Joseph R. Bennett, an ecologist at Carleton University in Ottawa, has been studying de-extinction technology with his team. The scientists are curious about the implications of bringing back extinct species like the dire wolf and woolly mammoth. Bennett reports three major problems.

DNA Degradation and Costs

One or two hybrid mammoths would only live so long. As well, smaller populations will be forced to procreate either amongst themselves or other species such as the Asian elephant. This can result in DNA degradation in the first generation. It would be extremely costly not just to develop the hybrids, but to provide staff to manage and monitor them.

Finding a Livable Space Might Be Difficult

The hybrid woolly mammoths would need lots of roaming space to accomplish ecosystem regeneration. However, the Arctic land, which once hosted the vanished Mammoth Steppe, is a changed place.

The region is now peppered with roads and towns, so it could be much more difficult for a population of large animals to find space there. As well, the local flora and fauna have seen tremendous changes over the years. It’s questionable whether the region could sustain a herd of woolly mammoths if they were brought back.

Bringing Back Extinct Species Can Harm Endangered Ones

The Carleton study compared several recently extinct species with similar living species that have conservation plans. Using this data, they were able to estimate the potential costs of resurrecting lost species, and the results were alarming.

The money to monitor and manage any resurrected species might need to come out of funding for protecting existing endangered species. The study found that many de-extinct animals would do no good for the ecosystem, while others could cause harm to as many as 14 other species.

The Future of De-Extinction Technology

Colossal BioSciences certainly shows every sign of forging full speed ahead. There are plans to create hybrids using woolly mammoth, dodo bird, and thylacine DNA in the next few years.

After bringing back dire wolves, Colossal BioSciences is moving forward with its plans to bring back the woolly mammoth. In 2024, the company even created a mouse with the shaggy fur of a Woolly Mammoth.

Around the same time, they also edited the genes of Australian marsupials to resist the poison of the invasive Cane Toad.

While the world waits to see what comes next, the sky is filled with a distant howl. It’s the cry of a little dire wolf cub whose very existence proves that, sometimes, the impossible isn’t so impossible after all.

IC Inspiration

While Colossal BioSciences is aiming for big solutions to our environmental problems, others are choosing smaller but equally wonderful methods.

Australia has faced some pretty trying circumstances lately. From 2017 to 2020, they were hit by the worst drought they’d seen in over a century. Referred to as the Tinderbox Drought, it threatened many major water supplies.

Then, in 2020, as much as 19 million hectares were destroyed by wild bushfires that ripped through the Australian wilderness. By the time it was done, an estimated 1.25 billion animals had been killed.

Among the animals that were hard hit were the Pygmy Possums. These little mouse-like marsupials have big, dark eyes like those of a cartoon character. At only 40 grams, they’re tiny enough to cling to the average adult’s thumb.

When the drought overtook the country, the Bogong moth was hit hard by the lack of moisture. With fewer moths to lay eggs, their larvae, which are a staple food of the possums, also disappeared. Without their favourite food, the possums’ numbers started to decline dramatically. At the lowest point, only about 700 of these tiny creatures were left.

The staff of the Melbourne Zoo came up with an idea. They created the Bogong Biscuit, a little cookie made of macadamias, mealworm, and various oils. This combination adequately replaces the nutritional content of the larvae.

Then they enlisted the help of several schools in the mountain towns near the possums’ natural habitat. The children at these schools spent two years making the biscuits and feeding them to the wee creatures. Thanks to their efforts, the possum population has rebounded to numbers expected before the fires. At last count, there were nearly 1000 individuals.

It wasn’t a mind-blowing miracle of science. Just a few classrooms full of kids and a recipe that called for three basic ingredients. Yet, it made all the difference in the world for these little animals.

Maybe not everything needs to be done on a mammoth scale, after all.

Science

10 Facts About Stars That Will Absolutely Blow Your Mind

Published 31 October 2024


I’ll argue that the biggest mystery is not what was, or what will be—it’s what is.

For thousands of years people have looked at the sky and asked that very question—it’s even in one of the world’s most famous lullabies.

Because that’s what stars do: they fill us with awe and intrigue. They make us wonder about the nature of the universe and ourselves, and although we might not have all our questions answered, we still feel hope and inspiration when we look up… Almost as though being here is enough.

Well, we don’t have all the answers for you, but we’ve got some, and they’re sure to leave you with the same curiosity that science never fails to deliver. At the very least, these 10 amazing facts about stars will make you the most interesting person in the room.

Oh, and they might also blow your mind.

1. Almost All Matter in The Universe Comes From Stars

The oxygen you breathe in, the calcium that strengthens your bones, and even the nitrogen that forms your DNA—they were all formed in stars long before galaxies even existed.

Stars spend their entire life building elements within themselves, then when they reach the end of their life, they explode and scatter the elements throughout space.

These elements are responsible for creating matter (anything that’s physical).

The only known elements that were not formed in stars are Hydrogen, Helium, and Lithium. These three elements were formed minutes after the big bang, long before stars.

2. Planets Are Born from Stars—and Depend on Them. The Ones That Don’t, Go Rogue

Planets are created from the leftover gas and dust in a spinning cloud that surrounds young stars.

Incredibly, there are around 100 billion stars in the galaxy, and it’s likely that for every star there are one or more planets. This means that there are more planets than stars, which makes sense because planets sometimes orbit stars—just like the Earth orbits the Sun.

Do Planets Orbit Stars?

It’s a common misconception that planets orbit stars, but strictly speaking, they don’t. A planet and the object it is paired with both orbit their shared center of mass, the point where their masses balance each other. Sometimes, that other object just happens to be a star, but it can also be another celestial body. This balance point is called the barycenter.
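
To make the barycenter idea concrete, here is a minimal Python sketch. It assumes a simple two-body picture and uses rounded textbook values for the masses, distances, and the Sun's radius, none of which come from this article. The function name `barycenter_offset` is just an illustrative choice.

```python
# Rough two-body barycenter estimate (illustrative, rounded values; not from the article).

SUN_MASS_KG = 1.989e30     # approximate mass of the Sun
SUN_RADIUS_M = 6.957e8     # approximate radius of the Sun


def barycenter_offset(primary_mass, secondary_mass, separation):
    """Distance from the primary's center to the shared center of mass."""
    return separation * secondary_mass / (primary_mass + secondary_mass)


# Approximate planet masses (kg) and average distances from the Sun (m).
jupiter_offset = barycenter_offset(SUN_MASS_KG, 1.898e27, 7.785e11)
earth_offset = barycenter_offset(SUN_MASS_KG, 5.972e24, 1.496e11)

for name, offset in [("Jupiter", jupiter_offset), ("Earth", earth_offset)]:
    where = "outside" if offset > SUN_RADIUS_M else "inside"
    print(f"Sun-{name} barycenter: {offset / 1000:,.0f} km from the Sun's center ({where} the Sun)")
```

With these rounded inputs, the Sun-Jupiter balance point lands just outside the Sun's surface, while the Sun-Earth one sits deep inside the Sun, which is why it is usually fine to say that the Earth orbits the Sun.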

Rogue Planets

Planets Can Be Players Too

Planets that aren’t bound to a star will not be in an orbit; therefore, they will float aimlessly around space. These planets are called rogue planets.

Some of these loner planets may have been part of a planetary system once, but for whatever reason, they were ejected from their orbit (or kicked out if you’re feeling comedic).

We’re not really certain why planets go rogue, but one idea is that another star passing in close proximity can pull a planet off its orbit with its strong gravitational pull (or prowess, if you want to keep the comedy going).

What’s The Deal with Rogue Planets?

Imagine being a planet who is part of a planetary system.

For millions of years, you’re dancing in an orbit around your star, the light of your life. Then one day, another star with bright red and orange colors comes by and pulls you away from your orbit, and just when you think you’re about to enter a dance with this new star, you end up floating aimlessly into space.

The first star won’t have you back, and it would seem that the newest star never wanted to tango in the first place.

Now, everybody calls you a loner and a nomad. But you know what? It doesn’t matter, because although you’re not in an orbit with any particular star, you still interact with other celestial bodies you pass by; in fact, sometimes the gravitational force from these bodies changes your direction and keeps you moving into different places (or spaces). You’re just not tied down to any particular one.

Yes, you are the rogue planet.

3. You Can Never Actually See A Star; You Can Only See The Light They Give Off

One of the most interesting facts about stars is that we don’t actually see them.

It’s easy to think that you are seeing a star when you look up into the night sky, but don’t be fooled—what you are really looking at is the light that stars give off.

In reality, stars are too far away to see with your naked eye, and even if you were to look through a telescope, you are not actually seeing the sun, moon, or any other celestial object—all you are seeing is their light.

You can only see objects that light has reached the surface of. For example, if you can view Mars with a telescope, it is only because light reflected from Mars has travelled all the way to your telescope. What you perceive is that reflected light, not Mars itself.

A light year is the distance light travels in one year. The stars you see when you look up at the night sky are about 1000 light years away; therefore, their light takes about 1000 years to reach the Earth, and when it does, it reaches your eyes.
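
As a rough illustration of how far a light year is, the sketch below multiplies a rounded speed of light of 186,000 miles per second by the number of seconds in a year. The 365.25-day year and the function name `light_years_to_miles` are assumptions made for this example.

```python
# Length of one light year, using a rounded speed of light of 186,000 miles per second.

SPEED_OF_LIGHT_MILES_PER_S = 186_000         # rounded figure
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60     # assumes a 365.25-day year


def light_years_to_miles(light_years):
    """Convert a distance in light years to miles."""
    return light_years * SPEED_OF_LIGHT_MILES_PER_S * SECONDS_PER_YEAR


one_light_year = light_years_to_miles(1)
print(f"1 light year is about {one_light_year:.2e} miles")
print(f"1000 light years is about {light_years_to_miles(1000):.2e} miles")
```

The result comes out a little under six trillion miles for a single light year, so a star 1000 light years away is thousands of trillions of miles from us.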

But space is a huge place, and some stars are much further than that.

4. The Light From Some Stars Travels For Billions of Years and Still Hasn’t Reached Us

Light has a speed of 186,000 miles per second.

To put that into perspective, light can travel from the Earth to the Moon in 1.28 seconds, and in that same amount of time, it could travel back and forth between New York and Los Angeles more than 40 times!
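
The comparison above can be checked with a few lines of Python. This is only a sketch: the average Earth-Moon distance and the straight-line New York to Los Angeles distance are assumed round figures, and the number of round trips depends heavily on which city-to-city distance you pick.

```python
# How long light takes to cover a distance at the rounded 186,000 miles per second.

SPEED_OF_LIGHT_MILES_PER_S = 186_000
EARTH_MOON_MILES = 238_855      # assumed average Earth-Moon distance
NEW_YORK_LA_MILES = 2_450       # assumed straight-line distance; road routes are longer


def light_travel_seconds(distance_miles):
    """Seconds light needs to travel the given distance."""
    return distance_miles / SPEED_OF_LIGHT_MILES_PER_S


moon_seconds = light_travel_seconds(EARTH_MOON_MILES)
# Distance light covers in that time, divided by one New York-Los Angeles round trip.
round_trips = (SPEED_OF_LIGHT_MILES_PER_S * moon_seconds) / (2 * NEW_YORK_LA_MILES)

print(f"Earth to Moon: {moon_seconds:.2f} seconds")
print(f"New York-Los Angeles round trips in that time: {round_trips:.0f}")
```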

There are stars in deep space—not within our galaxy—that are so far away, that their light has not reached the Earth yet.

If you’re ever feeling down just remember: a star couldn’t reach you by itself, so it sent off its light to travel for thousands of years—just to give you motivation and wonder when you need it most.

5. When You Look at a Star, You Are Looking at The Past

Let’s say that you go outside and begin to look at a star in the night sky.

Since you’ve read our 10 interesting facts about stars, you know that you are only seeing the light of that star, and not the star itself.

If you can only see the light that a star gives off, and it takes a thousand years for that light to reach the Earth, then you are actually seeing that star as it was 1000 years ago.

For you to see what that star looks like right now, you’d need to wait another thousand years—because the light it’s emitting right now would take another thousand years to reach you.

It’s too bad we’re not elves.

6. It’s Theoretically Possible That Some Stars You See Might Not Exist Anymore

Some stars in deep space are millions of light years away, meaning that it will take millions of years for their light to reach the point where you can see them with a telescope.

The most massive stars live for only a few million years, and if some of them sent out their light a few million years ago, it’s theoretically possible that these stars have died and aren’t there anymore. Why?

Because the light left the star long ago and has been travelling through space ever since, while the star itself stayed behind in its orbit in a galaxy far far away (unless the poor sucker went rogue).

The light and the star are two independent things. So, you can be looking at the light of the star, but for all you know, that star might have died.

But although it may be gone, you are still able to look at its light—it gives you inspiration and leaves you in wonder for your entire life.
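
The reasoning in this section boils down to one comparison: the light's travel time against how much life the star had left when that light set off. Here is a minimal sketch of that comparison; the function name and the two example stars are hypothetical, chosen only to illustrate the idea.

```python
# Could a star have died while its light was still on the way to us?


def may_have_died(distance_light_years, remaining_life_years_at_emission):
    """True if the light's travel time exceeds the life the star had left when it emitted that light."""
    travel_time_years = distance_light_years  # light covers one light year per year
    return travel_time_years > remaining_life_years_at_emission


# Hypothetical massive star: 5 million light years away, 2 million years of life left at emission.
print(may_have_died(5_000_000, 2_000_000))
# Hypothetical nearby star: 1,000 light years away, billions of years of life left at emission.
print(may_have_died(1_000, 5_000_000_000))
```

The first call reports that the star could already be gone, while the second reports that the nearby star is almost certainly still shining.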

| Star Size | Lifespan |
|---|---|
| Massive Stars | A few million years |
| Medium-sized Stars | Approx. 10 billion years |
| Small Stars | Tens to hundreds of billions of years |

8. Stars Are One of The Few Things in Existence That Give Off Their Own Light

Planets, moons, asteroids, and even most living things don’t produce light on their own; they reflect light from celestial objects that give off light—like stars.

In other words, you can only see other objects largely because stars exist. Without light from stars, your eyes would never be able to capture these objects (or people or things).

Here’s a bonus to go with our 10 facts about stars:

The only reason we can see anything on Earth is because light reflects off objects and into our eyes, and before we invented light bulbs, most of that light came from stars.

Other than infrared and thermal radiation—which can only be seen with some cameras—we as human beings don’t even produce our own light.

9. Stars Are Constantly Battling Gravity, and Gravity Always Wins (Thankfully)

Stars are in a constant battle with gravity throughout their lives.

The core of a star fuses hydrogen, and this fuel keeps the star stable by generating an outward pressure. At the same time, gravity is always trying to crush the star by pulling matter inward, creating inward pressure.

Eventually, the star runs out of fuel and gives in to the pressure: gravity takes over and the core implodes.

The explosion that follows spreads elements throughout the galaxy, and those elements were responsible for the creation of all matter.

We’re right back at square one and it feels encouraging to know:

a star literally gave its life for you to be here right now.

10. The Final Fact About Stars: A Star Created the Largest Ocean In The Universe—and it’s Floating In Space

The largest body of water in the universe is 140 trillion times the size of all of Earth’s oceans combined, and it’s floating in space around a quasar.

What does this have to do with stars?

Sometimes when stars explode, they create a region in space where gravity is so strong that nothing—not even light—can escape it. This is called a black hole—a term you’re probably familiar with.

The largest body of water in the universe is surrounding a type of black hole called a quasar and it’s moving through space at this very moment.

Anyone up for some space polo?

If Light Cannot Escape a Black Hole, Then How Do We See it?

Nothing can escape a black hole, not even light.

This means that black holes neither produce their own light nor reflect it; however, we can see black holes thanks to the light that surrounds them.

This is exactly what happened in 2019 when the first image of a black hole was captured in a galaxy 53.49 million light years away (Galaxy M87).

You notice how the red colour looks as though it’s moving? That’s because the gravitational force of the black hole is bending the light passing near it. Yes, you can even see it through a picture, or a picture of a picture.

In this way, we are able to view black holes because of the light around them.

IC Inspiration

There are so many cool things about stars, but the most amazing is that although they give off a finite amount of light, they still manage to give an infinite amount of knowledge and wisdom.

If I had to make the comparison, knowledge is like the light that stars shoot out, and wisdom is the star itself.

The pursuit of knowledge gives everything a visual—just like the light from a star allows us to see those visuals.

In knowledge, there is always another thing to learn—just like there is always another object that light touches.

But have you ever noticed that every time you see a particular thing, you see another thing with it? You can’t really see a single object, there’s always something on the periphery too.

Just like every time you learn something, there is something else to learn that may not seem as part of it, but is actually connected to it.

Knowledge searches for answers and all it finds is questions, but wisdom is quite different.

Wisdom searches for questions and all it finds is answers.

In time, knowledge becomes wisdom like stars become life, and I would argue that if the universe is infinite, then what we can know is also infinite.

And if the universe is finite, then it’s possible for humanity to get to the point where we have all the answers.

But What Point Am I Trying to Make?

Whatever the universe is, that’s what we are, because the universe is everything; therefore, it’s us too. Another way of saying it is that we are the universe. It becomes easy to see why our eyes take the shape of planets.

Stars tell a story that we come from the universe. It might even be possible that we come to know whether the universe is finite or not through knowing stars, and when we do, we might have another question to ask…

By minds much wiser with time that has passed.

Science

Commercial Hypersonic Travel Can Have You Flying 13,000 Miles In 10 Minutes!

Published 6 June 2024

If engineers start up a hypersonic engine at the University of Central Florida (UCF) and you’re not around to hear it, does it make a sound?

Hypersonic travel means moving at least five times the speed of sound. A team of aerospace engineers at UCF has created the first stable hypersonic engine, and it can have you travelling across the world at 13,000 miles per hour!
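
To see what that threshold means in practice, the sketch below converts a speed in miles per hour into a Mach number. It assumes a sea-level speed of sound of about 767 mph (roughly 343 metres per second at 20 degrees Celsius); the real value falls with altitude and temperature, so treat the output as a ballpark figure.

```python
# Rough Mach number check. Assumes the sea-level speed of sound at about 20 degrees Celsius;
# the real value changes with altitude and temperature.

SPEED_OF_SOUND_MPH = 767    # assumed, roughly 343 m/s
HYPERSONIC_MACH = 5         # hypersonic threshold: five times the speed of sound


def mach_number(speed_mph):
    """Speed expressed as a multiple of the assumed speed of sound."""
    return speed_mph / SPEED_OF_SOUND_MPH


def is_hypersonic(speed_mph):
    """True if the speed is at least five times the assumed speed of sound."""
    return mach_number(speed_mph) >= HYPERSONIC_MACH


for label, speed in [("typical jet", 575), ("13,000 mph engine", 13_000)]:
    print(f"{label}: Mach {mach_number(speed):.1f}, hypersonic: {is_hypersonic(speed)}")
```

Under this assumption, 13,000 miles per hour works out to roughly Mach 17, far beyond the Mach 5 threshold, while a typical jet stays below Mach 1.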

Compared to the 575 mph a typical jet flies, commercial hypersonic travel is a first-class trade-off anybody would be willing to make.

In fact, a flight from Tampa, FL to California would take nearly 5 hours on a typical commercial jet; whereas, with a commercial hypersonic aircraft, it will only take 10 minutes.
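The comparison above can be checked with a quick back-of-the-envelope calculation. This is only a rough sketch: the route distance of about 2,150 miles is an assumed round figure (it is not given in the article), and the function name `flight_minutes` is made up for illustration. It also ignores climb, descent, and winds.

```python
# Rough cruise-time comparison for a Tampa-to-California flight.
TAMPA_TO_LA_MILES = 2_150   # assumption: approximate straight-line distance
JET_MPH = 575               # typical jet speed quoted in the article
HYPERSONIC_MPH = 13_000     # speed quoted for the hypersonic engine


def flight_minutes(distance_miles: float, speed_mph: float) -> float:
    """Return cruise time in minutes, ignoring climb, descent, and taxiing."""
    if speed_mph <= 0:
        raise ValueError("speed must be positive")
    return distance_miles / speed_mph * 60


jet = flight_minutes(TAMPA_TO_LA_MILES, JET_MPH)
hyper = flight_minutes(TAMPA_TO_LA_MILES, HYPERSONIC_MPH)

print(f"Typical jet: {jet / 60:.1f} hours")
print(f"Hypersonic:  {hyper:.0f} minutes")
```

Under these assumptions the hypersonic trip comes out at about 10 minutes. The cruise-only jet figure is under 4 hours, lower than the nearly 5 hours of a real flight, presumably because real flights also spend time climbing, descending, and fighting headwinds.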

So here’s the question: When can we expect commercial hypersonic air flights?

When we stop combusting engines and start detonating them! With a little background information, you’ll be shocked to know why.

Challenges and Limitations of Commercial Hypersonic Travel

The challenge with commercial hypersonic air travel is that it becomes difficult to maintain combustion in a stable way and keep the aircraft moving. The difficulty comes from both the combustion and the aerodynamics that happen at such high speeds.

What Engineering Challenges Arise in Controlling and Stabilizing Hypersonic Aircraft at Such High Speeds?

Combustion is the process of burning fuel. It happens when fuel mixes with air, creating a reaction that releases energy in the form of heat. This mixture of air and fuel creates combustion, and combustion is what generates the thrust needed for the movement of most vehicles.

But hypersonic vehicles are quite different. A combustion engine is not very efficient for vehicles to achieve stable hypersonic speeds. For a hypersonic aircraft to fly commercially, a detonation engine is needed.

Detonation can thrust vehicles to much higher speeds than combustion, so creating a detonation engine is important for commercial hypersonic air travel. Detonation engines were thought of as impossible for a very long time, not because you couldn’t create them, but because stabilizing them is difficult.

On one hand, detonation can greatly speed up a vehicle or aircraft, but on the other hand, both the power and the speed it creates make stabilizing the engine even harder.

How Do Aerodynamic Forces Impact the Design and Operation of Hypersonic Vehicles?

Aerodynamics relates to the motion of air around an object—in this case, an aircraft. As you can imagine, friction between an aircraft and the air it travels through generates a tremendous amount of heat. The faster the vehicle, the more heat created.

Commercial hypersonic vehicles must be able to manage the heat created at hypersonic speeds to keep from being damaged altogether.

Hypersonic aircraft do exist, but only in experimental forms, such as in military applications. NASA’s Hyper-X program developed some of these vehicles, one of which is the X-43A, which handled hypersonic speeds of Mach 6.8 (6.8x the speed of sound).

| Mach Number Range | Name | Description |
| --- | --- | --- |
| Mach 1.0 | Sonic | Exactly the speed of sound. |
| Mach 1.2-5 | Supersonic | Faster than the speed of sound, characterized by shock waves. |
| Above Mach 5.0 | Hypersonic | More than 5x the speed of sound, with extreme aerodynamic heating. |
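The ranges in the table can be written as a small lookup. This is only an illustrative sketch: the function name `mach_regime` is made up here, and because the table does not cover speeds below Mach 1.0 or between Mach 1.0 and 1.2, the code simply reports those as outside the table rather than guessing a name.

```python
import math


def mach_regime(mach: float) -> str:
    """Classify a Mach number using only the ranges listed in the table."""
    if mach < 0:
        raise ValueError("Mach number cannot be negative")
    if mach > 5.0:
        return "hypersonic"
    if mach >= 1.2:
        return "supersonic"
    if math.isclose(mach, 1.0):
        return "sonic"
    # The table has no row for this speed, so no name is invented for it.
    return "outside the table"


for speed in (0.8, 1.0, 2.0, 6.8, 17.0):
    print(f"Mach {speed}: {mach_regime(speed)}")
```

For example, the X-43A's Mach 6.8 falls in the hypersonic row, while Mach 2.0 is supersonic.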

But vehicles for commercial hypersonic air travel are still a work in progress.

Engineers say that we will have these vehicles by 2050, but it may even be sooner than that. Here’s why.

Future Prospects and Developments in Hypersonic Travel

The world’s first stable hypersonic engine was created back in 2020 by a team of aerospace engineers at UCF, and they have continued to refine the technology since. This work is revolutionizing hypersonic technology in a way that had been thought of as impossible just a few years ago.

To create a stable engine for commercial hypersonic air travel, an engine must first be created that can handle detonation. Not only that, the engine must actually create more detonations while keeping them under control.

This is because, in order to reach hypersonic speeds and then stay at that level, there need to be repeated detonations thrusting the vehicle forward.

The development at UCF did just that. They created a Rotating Detonation Engine (RDE) called the HyperReact.

What Technological Advancements are Driving the Development of Commercial Hypersonic Travel?

When combustion happens, a large amount of energy creates a high-pressure wave known as a shockwave. This compression creates higher pressures and temperatures, which inject fuel into the air stream. This mixture of air and fuel creates combustion, and combustion is what generates the thrust needed for a vehicle’s movement.

Rotating Detonation Engines (RDEs) are quite different. The shockwave generated by the detonation is carried to the “test” section of the HyperReact, where the wave repeatedly triggers detonations faster than the speed of sound (picture Wile E. Coyote lighting up his rocket to catch up to Road Runner).

Theoretically, this engine can allow for hypersonic air travel at speeds of up to Mach 17 (17x the speed of sound).
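To see how Mach 17 lines up with the 13,000 mph figure, here is a minimal conversion sketch. It assumes a speed of sound of about 767 mph, which holds near sea level at roughly 20 °C; the real value drops at cruising altitude, so treat the results as ballpark numbers. The name `mach_to_mph` is made up for illustration.

```python
SPEED_OF_SOUND_MPH = 767.0  # assumption: sea level, about 20 degrees Celsius


def mach_to_mph(mach: float, speed_of_sound_mph: float = SPEED_OF_SOUND_MPH) -> float:
    """Convert a Mach number to miles per hour for a given speed of sound."""
    return mach * speed_of_sound_mph


print(f"Mach 5:  {mach_to_mph(5):,.0f} mph")
print(f"Mach 17: {mach_to_mph(17):,.0f} mph")
```

With that assumption, Mach 17 works out to roughly 13,000 mph, and the Mach 5 threshold for hypersonic flight is a little over 3,800 mph.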

Hypersonic technology built on the Rotating Detonation Engine will pave the way for commercial hypersonic air travel. But even before that happens, RDEs will be used for space launches and eventually space exploration.

NASA was already testing 3D-printed Rotating Detonation Rocket Engines (RDREs) in 2024.

How Soon Can We Expect Commercial Hypersonic Travel to Become a Reality?

Since we now have the world’s first stable hypersonic engine, the world’s first commercial hypersonic flight won’t be far off. Professor Kareem Ahmed, UCF professor and team lead of the experimental HyperReact prototype, says it’s very likely we will have commercial hypersonic travel by 2050.

It’s important to note that hypersonic flight has happened before, but only in experimental form. NASA’s X-43A aircraft reached nearly Mach 10, or roughly 7,000 mph. The difference is that the X-43A flew on a scramjet and not a Rotating Detonation Engine (RDE).

Scramjets are combustion engines that are also capable of hypersonic speeds, but they are less efficient than Rotating Detonation Engines (RDEs) because they rely on combustion, not continuous detonation.

This makes RDEs the better choice for commercial hypersonic travel, and it explains why NASA has been testing them for space launches.

One thing is certain:

We can shoot for the stars but that shot needs to be made here on Earth… If we can land on the moon, we’ll probably have commercial hypersonic travel soon.

IC INSPIRATION

The first successful aviation flight took place 26 years after the first patented aviation engine was created; and the first successful spaceflight happened 35 years after the first successful rocket launch.

If the world’s first stable hypersonic engine was created in 2020, how long after until we have the world’s first Mach 5+ commercial flight?

| Years | Milestone |
| --- | --- |
| 1876-1903 | Nicolaus Otto developed the four-stroke combustion engine in 1876, which became the basis for the Wright brothers performing the first flight ever in 1903. |
| 1926-1961 | Robert H. Goddard’s first successful rocket launch in 1926 paved the way for the first human spaceflight by Yuri Gagarin in 1961. |
| 2020-2050 | The first stable RDE was created in 2020 and history is in the making! |

Shout out to Professor Kareem Ahmed and his team at UCF. They’ve set the precedent for history in the making.

Imagine travelling overseas without the long flight and difficult hauls, or RDREs so great that they reduce costs and increase the efficiency of space travel. When time seems to be moving fast, hypersonic speed is something I think everyone can get behind.

Would you like to know about some more amazing discoveries? Check out the largest ocean in the universe!

The Largest Body of Water in the Universe is Floating in Space. Can We Use It?

The Humane AI Pin is Here! So is Everything You Need to Know

3D Printing in Hospitals Has Saved Children’s Lives

Alef Model A Flying Car Pre-Orders Surge to a Whopping 2,850

Michelin Uptis: Airless Car Tires Emerging in 2024

Dire Wolf De-extinction: The Science Behind it and What’s Coming Back Next

How Do I Become an Entrepreneur? The 5-Step Guide No One Tells You About

10 Facts About Stars That Will Absolutely Blow Your Mind

January Brain Exists, But You Can Beat the Winter Blues. Here’s How

Upside-Down Trains? Why We Should Be Seeing More of Them!

Trending

-

Science2 years ago

Science2 years agoThe Largest Body of Water in the Universe is Floating in Space. Can We Use It?

-

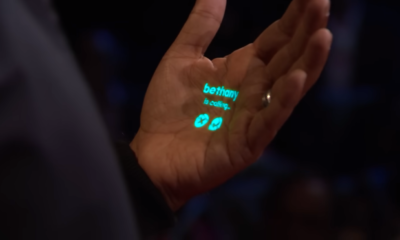

Technology2 years ago

Technology2 years agoThe Humane AI Pin is Here! So is Everything You Need to Know

-

Healthcare2 years ago

Healthcare2 years ago3D Printing in Hospitals Has Saved Children’s Lives

-

Technology1 year ago

Technology1 year agoAlef Model A Flying Car Pre-Orders Surge to a Whopping 2,850

-

Technology2 years ago

Technology2 years agoMichelin Uptis: Airless Car Tires Emerging in 2024

-

Sustainability2 years ago

Sustainability2 years agoTeamSeas Uses Gigantic Robot to Battle Plastic Pollution

-

Technology2 years ago

Technology2 years agoNew Atmospheric Water Generator Can Save Millions of Lives

-

Sustainability1 year ago

Sustainability1 year agoArchangel Ancient Tree Archive: Cloning Ancient Trees to Build Strong Forests